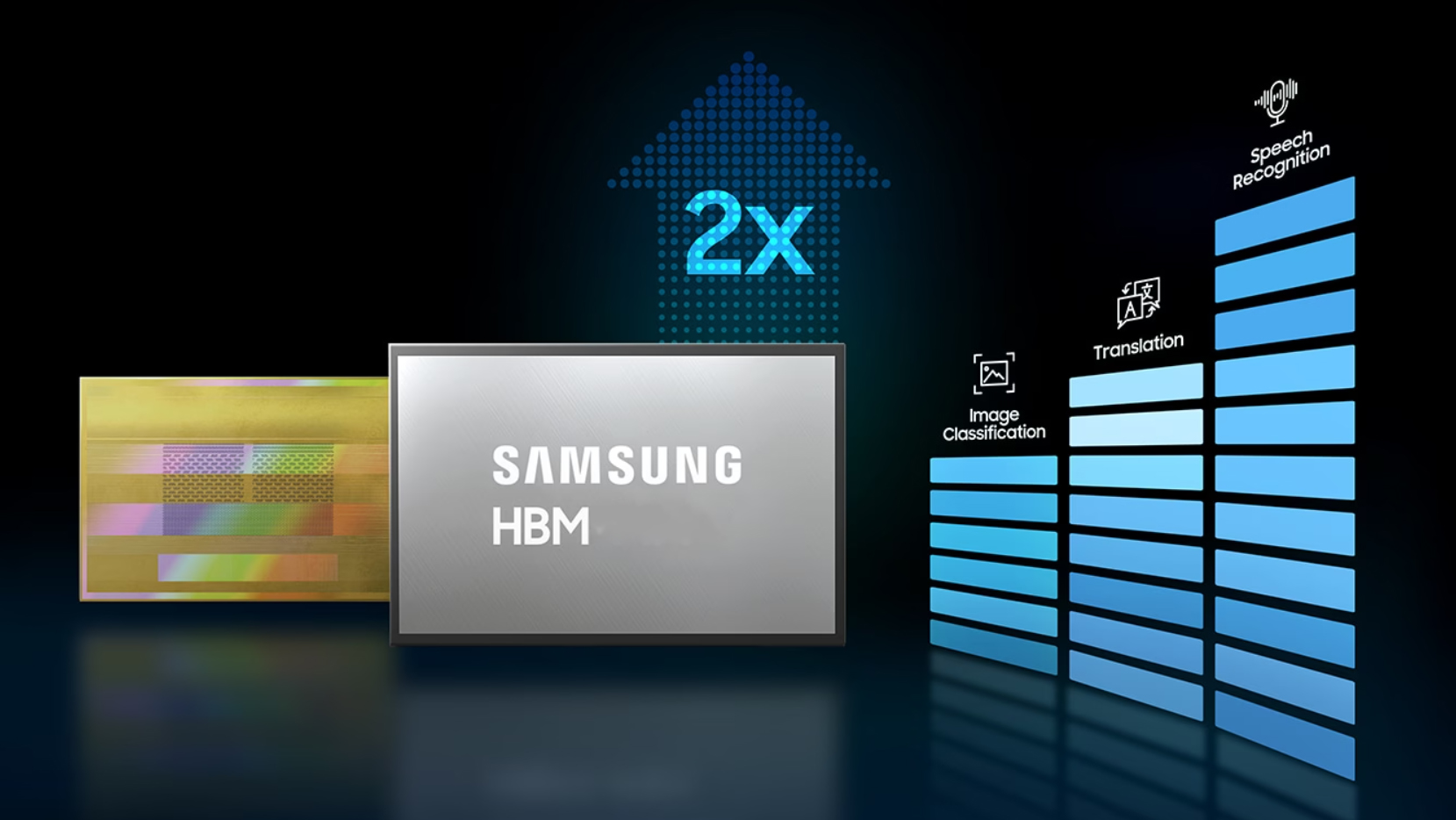

Next-generation HBM4 memory brings significantly faster data transfer, making AI vastly more powerful

Next-generation HBM4 memory could bring a huge jump in performance, above all in memory bandwidth, which could double compared to the current generation. That would have a major impact on the industry and on the fields that rely on it, especially since the HBM memory interface has remained unchanged at 1024 bits per stack since the standard debuted in 2015.

While this advancement looks great on paper, it comes with many unknowns, mainly around how manufacturers will handle the higher data transfer rates and the changes needed to the mechanism that lets system memory serve as a temporary data store acting as a FIFO buffer.

HBM4 will make AI even more powerful

The industry is currently integrating the HBM3e standard into the latest AI GPU chips, which could reach a bandwidth of up to 5 TB/s per chip and deliver a serious performance boost for AI accelerators in the class of NVIDIA's very popular H100.

DigiTimes reports that Samsung and SK Hynix are moving toward a "2000 I/O" interface for their next-generation HBM4 memory. In practical terms, this means much higher memory bandwidth, which would let processors feed far larger and more complex large language models (LLMs), a key factor for the next generation of generative AI that can create content on its own.
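As a rough illustration of why more I/O means more bandwidth, the sketch computes peak per-stack bandwidth as interface width multiplied by per-pin data rate, divided by 8 bits per byte. It assumes the reported "2000 I/O" corresponds to a 2048-bit interface, compares it with the 1024-bit interface HBM has used so far, and holds the per-pin rate at an illustrative 6.4 Gb/s. The function name `stack_bandwidth_gb_s` is hypothetical, and actual HBM4 pin speeds may differ.

```python
def stack_bandwidth_gb_s(interface_bits: int, pin_rate_gbit_s: float) -> float:
    """Peak per-stack bandwidth in GB/s.

    width (bits) x per-pin rate (Gb/s) gives Gb/s; divide by 8 to get GB/s.
    """
    return interface_bits * pin_rate_gbit_s / 8


# Assumed, illustrative per-pin rate, held constant so only the width changes.
PIN_RATE_GBIT_S = 6.4

current = stack_bandwidth_gb_s(1024, PIN_RATE_GBIT_S)  # 1024-bit interface used since 2015
hbm4 = stack_bandwidth_gb_s(2048, PIN_RATE_GBIT_S)     # assumed 2048-bit ("2000 I/O") interface

print(f"1024-bit: {current:.1f} GB/s")
print(f"2048-bit: {hbm4:.1f} GB/s")
print(f"ratio: {hbm4 / current:.1f}x")
```

With the pin rate held fixed, doubling the width doubles peak bandwidth (819.2 GB/s versus 1638.4 GB/s per stack), which is the arithmetic behind the "could be doubled" claim; in practice, manufacturers may also change pin speeds, which would shift both numbers.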

The artificial intelligence industry is in the midst of a paradigm shift, as the creative capabilities of generative AI make their way into consumer applications. The result is a technology with enormous potential power, shaped by a race in which the most powerful companies are competing to show their strength.

This has ultimately created huge demand for AI GPUs, which rely on HBM as a key component for high-speed operation, and memory manufacturers are currently focused on delivering enough product to meet that demand. Innovations in the HBM market are inevitable, but they are unlikely to arrive soon, at least not in the coming years, unless something is "cooking" behind the scenes that we are not yet aware of, reports wccftech.