The first AMD Instinct MI300X AI accelerators are arriving at customers

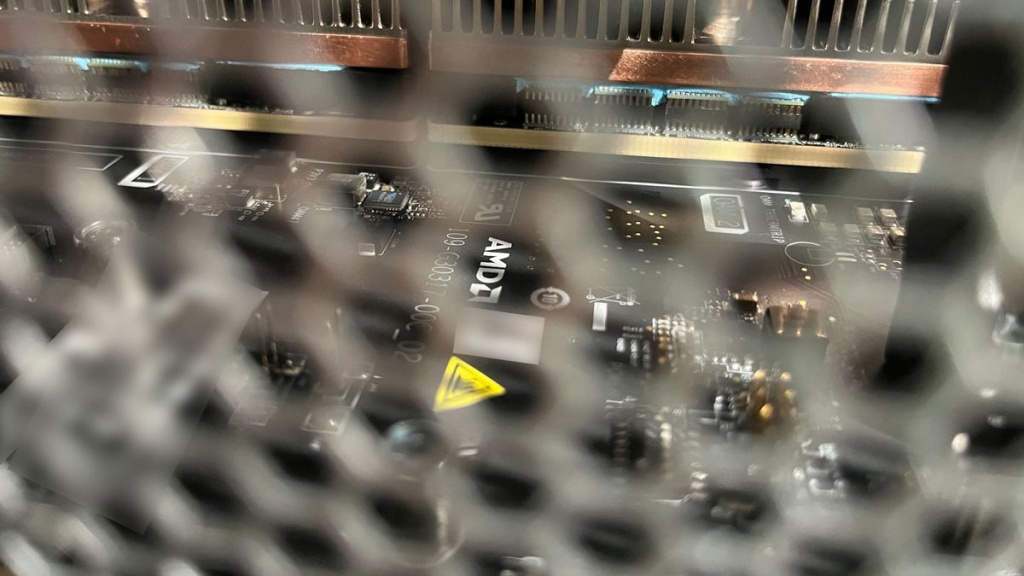

According to Sharon Zhou, CEO of LaminiAI, as reported by Tom’s Hardware, AMD has started shipping its Instinct MI300X GPUs, which are designed for artificial intelligence and high-performance computing (HPC) workloads. “First AMD MI300X live in use,” Zhou wrote. She posted a screenshot showing an 8-way AMD Instinct MI300X system in action.

LaminiAI intends to use the AMD Instinct MI300X to accelerate large language models (LLMs) for enterprise applications. AMD has been delivering Instinct MI300 series products to supercomputing customers for some time and expects the series to be the fastest product in its history to reach a billion dollars in sales; it has now also started delivering Instinct MI300X GPUs. As a company that already has a partnership with AMD, LaminiAI likely had priority access to the new hardware.

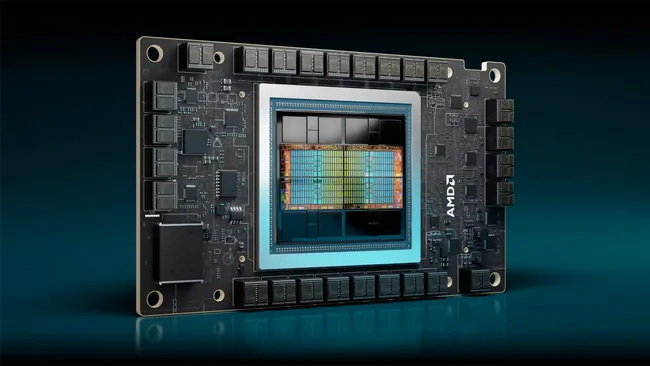

The AMD Instinct MI300X is a “cousin” of AMD’s Instinct MI300A, the first data center solution to combine x86 general-purpose CPU cores with highly parallel CDNA 3-based compute cores for AI and HPC workloads. Unlike the Instinct MI300A, the Instinct MI300X has no x86 cores, but it has more CDNA 3 chiplets (304 compute units in total, compared with 228 in the MI300A) and therefore offers higher performance for AI and HPC. In addition, the Instinct MI300X comes with 192 GB of HBM3 memory with a peak bandwidth of 5.3 TB/s.

Sharon Zhou, CEO of Lamini AI

Based on these numbers, the AMD Instinct MI300X should outperform the Nvidia H100 80GB, which is already available and widely deployed by companies such as Google, Meta (Facebook), and Microsoft. The Instinct MI300X is in fact a more direct competitor to the Nvidia H200 141GB GPU, which has yet to hit the market.

According to previous reports, Meta and Microsoft have ordered significant quantities of AMD Instinct MI300 series products. However, LaminiAI is the first company to confirm that it has begun using the Instinct MI300X.