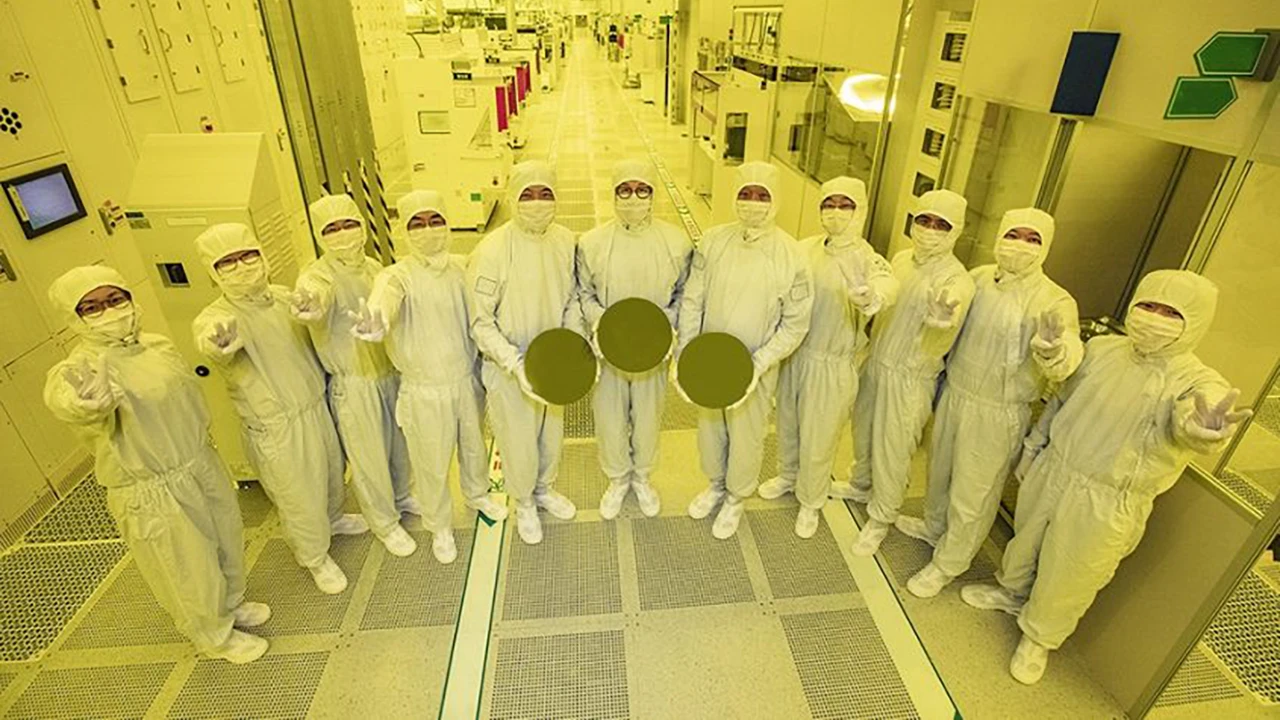

Samsung develops AI chip with Naver, claims it is 8 times more efficient than NVIDIA’s best semiconductor

It looks like the partnership between Samsung and Naver to develop chips for hyperscale AI models is already bearing fruit, this time in the form of an advanced AI chip.

The two companies claim that their FPGA-based solution is as much as eight times more power-efficient than anything NVIDIA makes. It is a bold claim, and one that will certainly be put to the test once independent parties get a chance to take a closer look at the chip.

According to the two companies, this level of performance comes in part from the way Low-Power Double Data Rate (LPDDR) DRAM is integrated into the AI chip. However, little detailed information is available on exactly how the LPDDR DRAM works inside the new chip to deliver such drastic improvements.

The new AI chip is powered by the HyperCLOVA language model

HyperCLOVA, a hyperscale language model developed by Naver, plays a key role in these results. Naver says it continues to work on improving this model, hoping to achieve greater efficiency by improving compression algorithms and simplifying the model. HyperCLOVA currently has over 200 billion parameters.
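To give a rough sense of why compression matters at this scale, the sketch below estimates how much memory the weights of a 200-billion-parameter model would occupy at a few common numeric precisions. It is an illustration under stated assumptions, not a description of HyperCLOVA or of Naver's methods: it assumes a dense model with no sparsity or storage overhead, treats "over 200 billion" as exactly 200 billion, ignores activations and other runtime memory, and uses reduced-precision storage (quantization) as just one common example of compression. The names `PARAMS`, `BYTES_PER_PARAM` and `weight_gb` are hypothetical.

```python
# Rough weight-storage estimate for a dense model (illustrative assumptions only).
# Assumption: 200 billion parameters, the article's "over 200 billion" used as a lower bound.
PARAMS = 200_000_000_000

# Assumption: bytes per parameter for common numeric formats, with no sparsity
# and no per-tensor overhead (scales, metadata) counted.
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_gb(params: int, fmt: str) -> float:
    """Return the weight storage in gigabytes (1 GB = 10**9 bytes)."""
    return params * BYTES_PER_PARAM[fmt] / 1e9


if __name__ == "__main__":
    for fmt in BYTES_PER_PARAM:
        print(f"{fmt}: {weight_gb(PARAMS, fmt):,.0f} GB")
```

Under these assumptions the weights alone shrink from about 800 GB at 32-bit precision to about 100 GB at 4-bit precision, which hints at why reducing model size is tied so closely to memory bandwidth and power consumption.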


Suk Geun Chung, Director of Naver CLOVA CIC, said: “By combining our knowledge and skills gained from HyperCLOVA with Samsung’s semiconductor manufacturing capabilities, we believe we can create an entirely new class of solutions that can better address the challenges of today’s AI technologies,” reports Korean Business.

It’s certainly no surprise to see another AI chip partnership bear fruit, given that NVIDIA is currently making a ton of money from its AI products. More options will be good for developers who want to work with AI and, ultimately, for end users as well. It remains to be seen when, and in which products, this AI chip will appear.